|

||

A recent study conducted by Apple’s artificial intelligence (AI) researchers has raised significant concerns about the reliability of large language models (LLMs) in mathematical reasoning tasks. Despite the impressive advancements made by models like OpenAI’s GPT and Meta’s LLaMA, the study reveals fundamental flaws in their ability to handle even basic arithmetic when faced with slight variations in the wording of questions.

The findings highlight that these models rely more on pattern recognition than genuine logical reasoning, a vulnerability that becomes more apparent with the introduction of a new benchmark called GSM-Symbolic.

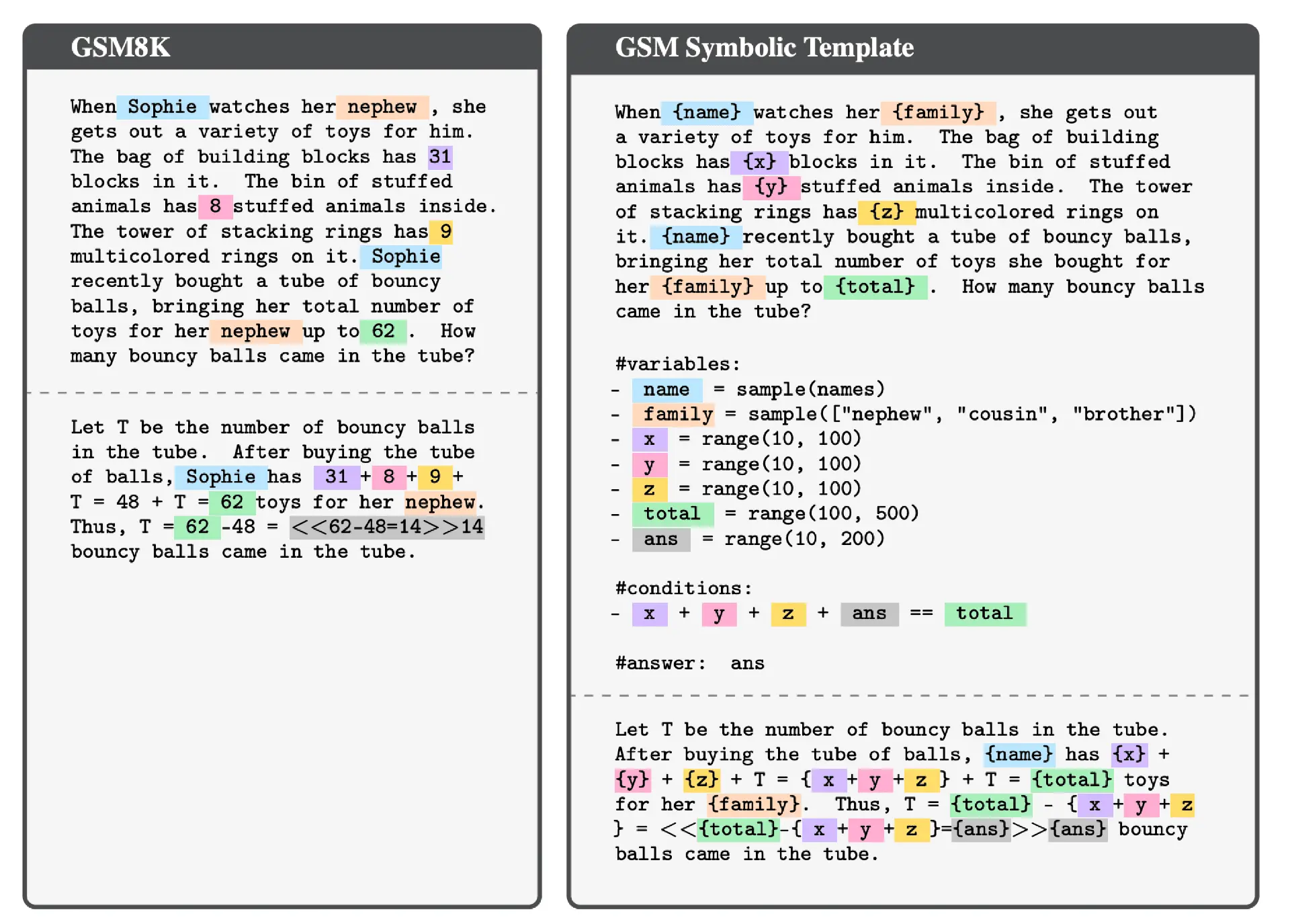

GSM-Symbolic testing: The GSM-Symbolic benchmark, developed by Apple’s team, was designed to test LLMs in more challenging and varied contexts. Unlike traditional benchmarks such as GSM8K, which presents grade-school-level math problems in a fixed format, GSM-Symbolic uses symbolic templates to create diverse variants of the same problems. This allows for a more rigorous examination of the models’ reasoning capabilities, particularly under conditions where irrelevant information or slight changes to numbers are introduced.

The results are striking. The study showed that models often produce inconsistent answers when faced with seemingly minor adjustments to a problem’s wording or numerical values. For instance, simply altering a number in the GSM-Symbolic benchmark significantly reduced accuracy across all models tested. Even more telling is the introduction of irrelevant information, such as additional clauses that do not impact the fundamental solution. The researchers found that adding such distractions could reduce the model’s performance by up to 65%.

One example highlighted in the report involved a question about counting kiwis. A model was asked how many kiwis were collected over three days, with an additional, irrelevant clause about the size of some of the kiwis picked on the final day. Despite this extra information being irrelevant, models such as OpenAI’s and Meta’s subtracted the number of “smaller” kiwis from the total, leading to an incorrect answer.

These failures suggest that the models are not engaging in true logical reasoning but are instead performing sophisticated pattern matching. This behavior aligns with the findings of previous studies, which have argued that LLMs are highly sensitive to changes in token sequences. In essence, they struggle with understanding when information is irrelevant, making them susceptible to errors even in simple tasks that a human would find trivial.

Apple’s study is part of a growing body of research questioning the robustness of LLMs in complex tasks that require formal reasoning. While models have shown remarkable abilities in areas such as natural language processing and creative generation, their limitations become evident when tasked with reasoning that involves multiple steps or irrelevant contextual information. This is particularly concerning for applications that require high reliability, such as coding or scientific problem-solving.

The bottom line: Looking ahead, the study underscores the need for more reliable benchmarks and methodologies to assess reasoning capabilities in AI models. As LLMs continue to be integrated into critical industries such as healthcare and finance, the ability to reason reliably—especially in the face of distraction—will be essential. If the flaws identified in Apple’s research are not addressed, the dream of AI-driven innovation in these fields could be undermined by models that crumble under the weight of their own complexity.

Sponsored byCSC

Sponsored byRadix

Sponsored byVerisign

Sponsored byDNIB.com

Sponsored byVerisign

Sponsored byWhoisXML API

Sponsored byIPv4.Global