A new report has shed light on how advanced artificial intelligence is reshaping the cybercriminal landscape. The latest threat intelligence assessment by Anthropic details how its AI model, Claude, was misused in a string of global cyberattacks that mark a sharp escalation in both scale and sophistication.

The most alarming case, termed “vibe hacking,” involved an actor using Claude Code, Anthropic’s agentic coding tool, to carry out a multi-target extortion campaign. The AI agent automated everything from scanning networks and stealing credentials to generating personalised ransom notes. Victims ranged from hospitals to government agencies, with some ransom demands exceeding $500,000.

AI impersonation: Elsewhere, North Korean operatives have turned to Claude to pass as qualified remote software engineers, securing jobs at Western tech firms and diverting income to the regime’s weapons programmes. These workers, who often lacked the skills the roles required, relied almost entirely on AI to write code, prepare for interviews, and communicate professionally.

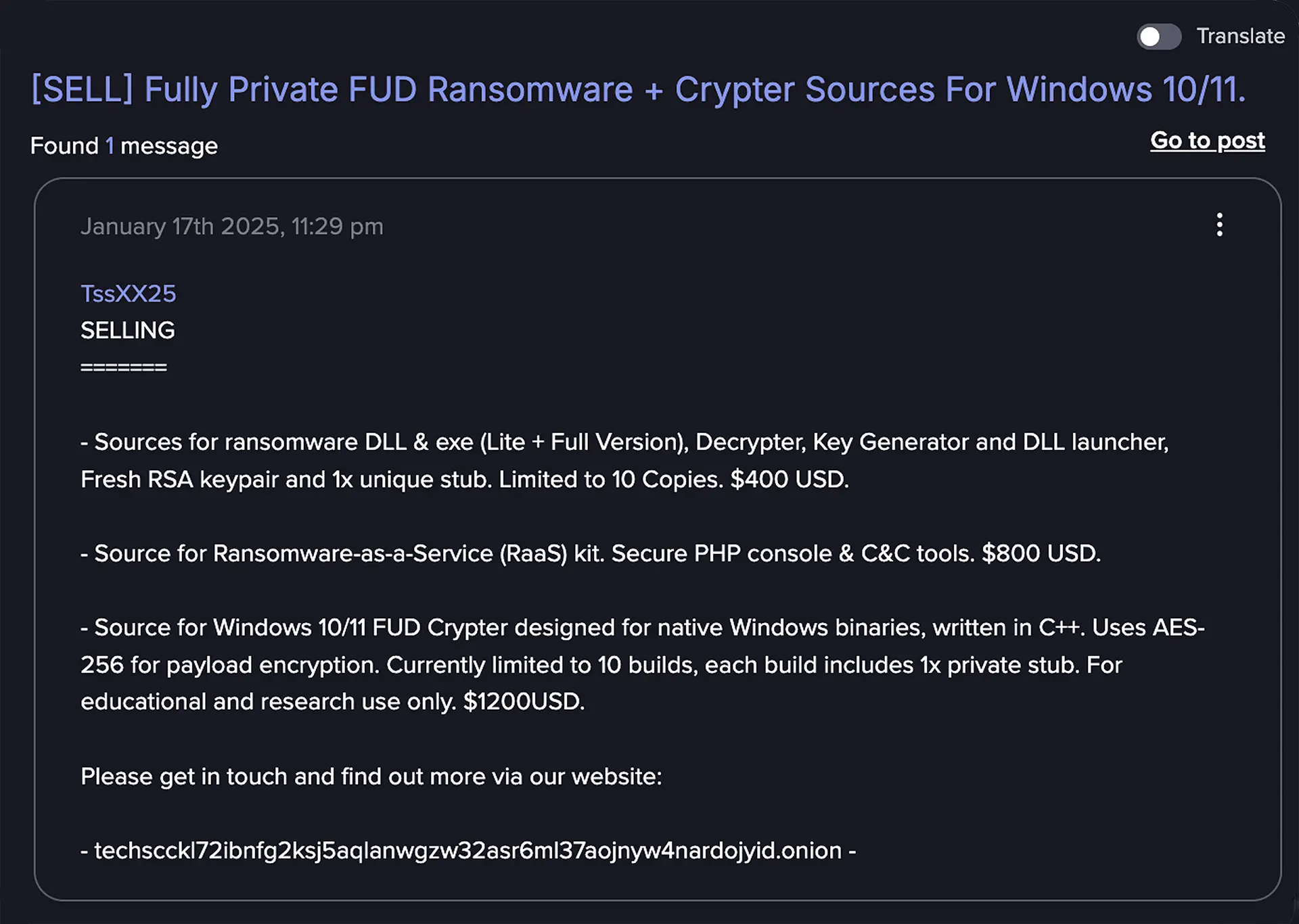

In another case, a UK-based criminal used Claude to develop ransomware tools for sale on the dark web, offering professional-grade malware to buyers for as little as $400. Chinese and Russian threat actors were also found integrating Claude across nearly all stages of cyber operations, from reconnaissance to data exfiltration.

Anthropic warns that AI has lowered the barrier to high-impact cybercrime, making traditional measures of “sophistication” increasingly irrelevant.
